Unit Overview

|

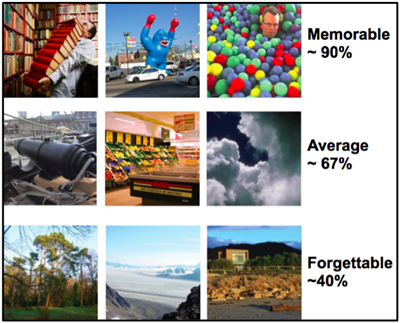

What makes an image memorable? Using insights from perceptual experiments, fMRI studies, and computational modeling, Aude Oliva has identified some key factors that determine visual memorability. (Image based on Isola, Phillip, Jianxiong Xiao, Devi Parikh, Antonio Torralba, and Aude Oliva. “What Makes a Photograph Memorable?” IEEE Trans. Pattern Anal. Mach. Intell. 36, no. 7 (July 2014): 1469–1482. From author’s final manuscript, license CC BY-NC-SA.) |

How do we obtain a rich understanding of the world from visual input? What visual cues enable us to recognize objects from their structure or texture, or to remember objects and scenes that we have encountered before? What is the future for intelligent systems that can drive a car or assist the visually impaired? This unit explores these questions from the perspectives of perception and cognition, brain imaging, and the engineering of intelligent systems.

From very rudimentary visual abilities present at birth, infants learn to recognize complex objects such as hands and how to detect the direction of gaze of a caregiver. Shimon Ullman’s first lecture shows how such capabilities can be learned from a stream of natural videos and without supervision.

Shimon Ullman’s second lecture explores the minimal configurations of image content needed to recognize an object category, revealing a human capability that far surpasses that of current recognition systems and yielding insights into the brain mechanisms underlying visual recognition.

Understanding what makes an image memorable can shed light on the representations of visual knowledge in the brain and neural basis of memory loss, and is important for applications such as data visualization and image retrieval. From Aude Oliva, you will learn about the visual cues that govern memorability, revealed from behavioral experiments, computational models, and brain imaging studies.

Guest speaker Eero Simoncelli presents a physiologically inspired model of the analysis of visual textures by the ventral pathway of the brain. The synthesis of texture metamers, stimuli that are physically different but appear the same to a human observer, provide a powerful tool for probing the underlying brain mechanisms.

We are rapidly approaching a time of fully autonomous vehicles and intelligent systems to assist the blind. Guest speaker Amnon Shashua shows how advanced computer vision technology created by Mobileye will change transportation, and how wearable devices created by OrCam can profoundly impact the lives of the visually impaired.

Unit Activities

Useful Background

- Introductions to machine learning, neuroscience, cognitive science

Videos and Slides

Further Study

Additional information about the speakers’ research and publications can be found at their websites:

- Aude Oliva, Computational Perception and Cognition Lab, MIT

- Amnon Shashua, Hebrew University of Jerusalem; also see industry websites OrCam, Mobileye

- Eero Simoncelli, Laboratory for Computational Vision, NYU

- Shimon Ullman, MIT and The Weizmann Institute

Bylinskii, Z., P. Isola, et al. “Intrinsic and Extrinsic Effects on Image Variability.” (PDF - 4.8MB) Vision Research 116 Part B (2015): 165–78.

Cichy, R. M., A. Khosla, et al. “Dynamics of Scene Representations in the Human Brain Revealed by Magnetoencephalography and Deep Neural Networks.” NeuroImage (2016). (in press)

Freeman, J., and E. P. Simoncelli. “Metamers of the Ventral Stream.” (PDF - 2.0MB) Nature Neuroscience 14, no. 9 (2011): 1195–1201.

Freeman, J., C. M. Ziemba, et al. “A Functional and Perceptual Signature of the Second Visual Area in Primates.” (PDF - 1.5MB) Nature Neuroscience 16, no. 7 (2013): 974–81.

Portilla, J., and E. P. Simoncelli. “A Parametric Texture Model Based on Joint Statistics of Complex Wavelet Coefficients.” (PDF - 2.0MB) International Journal of Computer Vision 40, no. 1 (2000): 49–71.

Ullman, S., L. Assif, et al. “Atoms of Recognition in Human and Computer Vision.” (PDF) Proceedings of the National Academy of Sciences 113, no. 10 (2016): 2744–49.

Ullman, S., D. Harari, et al. “From Simple Innate Biases to Complex Visual Concepts.” (PDF - 2.5MB) Proceedings of the National Academy of Sciences 109, no. 44 (2012): 18215–20.

Zhou, B., A. Khosla, et al. “Object Detectors Emerge in Deep Scene CNNs.” (PDF - 7.0MB) International Conference on Learning Representations (2015).