Unit Overview

|

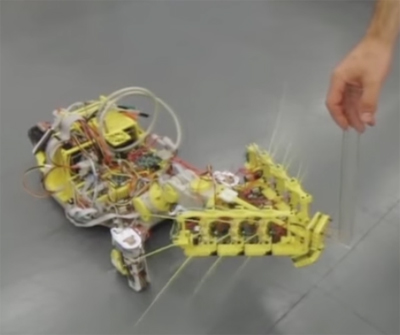

Robotics researchers at the University of Sheffield, led by Tony Prescott, have built a robot that senses the environment with moving whiskers, incorporating a model of whisking behavior in rodents and observations of sensory-motor systems in the mammalian brain. (Image courtesy of Martin Pearson and Ben Mitchinson, used with permission.) |

The challenges of building robots that can sense the environment, navigate through the world, manipulate objects, and learn from experience may shed light on how these tasks are performed by biological systems. A deeper understanding of sensory-motor processing in biological systems may also inform the design of more intelligent robots. This unit explores some of the successes, challenges, and insights gained from efforts to build intelligent humanoid robots, self-driving vehicles, and robots that construct a model of the spatial structure of objects and surfaces in the environment through intelligent sensing and exploration.

Russ Tedrake describes important research advances that emerged from the design of MIT’s entry in the 2012 DARPA Robotics Challenge, whose goal was to “develop ground robots that perform complex tasks in dangerous, degraded human-engineered environments.” MIT’s entry was based on a humanoid robot designed by Boston Dynamics, Inc.

John Leonard addresses the challenges faced in the design of self-driving vehicles, and lessons learned from MIT’s entry into the 2007 DARPA Urban Challenge. This lecture also examines the problem of Simultaneous Localization and Mapping (SLAM) by a mobile robot that senses and maps the 3D layout of its environment through long-term exploration.

From Tony Prescott, you will learn about the sensory-motor pathways in the brain, and how the construction of a whiskered robot can shed light on the mechanisms by which rodents use their whiskers to derive information about the structure of their environment and use that information to navigate.

Stefanie Tellex explores the benefits of human-robot collaboration, examining how robots with limited sensing and grasping capabilities can learn to grasp complex objects of the sort found in a typical household, and how humans can communicate actions and object knowledge to a robot through language and gesture.

The iCub team at the Italian Institute of Technology developed an open-source robot platform, the iCub, to enable advancements in the design of intelligent humanoid robots worldwide. Giorgio Metta discusses the long-term vision of the iCub team and overall design of the iCub platform.

Members of the iCub team, Carlo Ciliberto, Alessandro Roncone, Raffaello Camoriano, and Giulia Pasquale, describe the design of the iCub robot in more detail, and present results of research that uses the iCub to study the integration of visual and tactile information, large-scale incremental learning, and teaching the iCub to recognize objects.

A panel of robotics experts led by Patrick Winston discusses topics such as the general design principles that emerge from current work in robotics, the insights that building robots offers for the study of biological systems and that biology offers for robot design, and the role of DARPA grand challenges in advancing robotics research.

Unit Activities

Useful Background

- Introduction to machine learning

Videos and Slides

Further Study

Additional information about the speakers’ research and publications can be found at their websites:

- John Leonard, Marine Robotics Group, MIT

- Giorgio Metta, Italian Institute of Technology

- Tony Prescott, Sheffield Centre for Robotics, University of Sheffield

- Russ Tedrake, Robot Locomotion Group, MIT

- Stefanie Tellex, Brown University

- iCub Team: Carlo Ciliberto, Alessandro Roncone, Raffaello Camoriano, Giulia Pasquale

Learn more about the iCub project, including recent publications, on the iCub humanoid robot project site, part of the Italian Institute of Technology website.

Fallon, M., S. Kuindersma, et al. “An Architecture for Online Affordance-Based Perception and Whole-Body Planning.” (PDF - 21.5MB) Journal of Field Robotics 32, no. 2 (2015): 229–54.

Grant, R. A., A. L. Sperber, et al. “The Role of Orienting in Vibrissal Touch Sensing.” Frontiers in Behavioral Neuroscience 6, no. 39 (2012): 1–12.

Kuindersma, S., R. Deits, et al. “Optimization-Based Locomotion Planning, Estimation, and Control Design for the Atlas Humanoid Robot.” (PDF - 30.6MB) Autonomous Robots 40, no. 3 (2016): 429–55.

Leonard, J., et al. “A Perception-Driven Autonomous Urban Vehicle.” (PDF - 1.8MB) Journal of Field Robotics 25, no. 10 (2008): 724–74. (28 authors)

Oberlin, J., and S. Tellex. “Autonomously Acquiring Instance-Based Object Models from Experience.” (PDF - 1.2MB) International Symposium on Robotics Research (2015).

Pearson, M. J., C. Fox, et al. “Simultaneous Localisation and Mapping on a Multi-Degree of Freedom Biomimetic Whiskered Robot.” (PDF - 2.0MB) Proceedings IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe (2013): 586–92.

Tellex, S., R. A. Knepper, et al. “Asking for Help Using Inverse Semantics.” (PDF - 2.6MB) Proceedings Robotics: Science and Systems (Berkeley, CA) (2014).

Whelan, T., M. Kaess, et al. “Real-Time Large-Scale Dense RGB-D SLAM with Volumetric Fusion.” The International Journal of Robotics Research 34, no. 4–5 (2015): 598–626.