Unit Overview

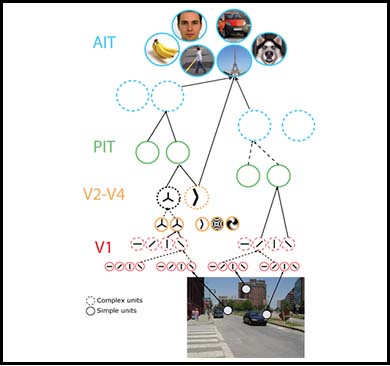

Tomaso Poggio and his colleagues have developed a model of the early processing stages in the ventral visual pathway of the brain, which may underlie our ability to recognize object categories from visual input presented in a brief flash of less than 100 milliseconds. (Courtesy of Tomaso Poggio and Thomas Serre. From “Models of visual cortex.” Scholarpedia 8 no. 4 (2013): 3516. License CC BY-NC-SA.)

Research on learning in deep networks has led to impressive machine performance on tasks such as object recognition, but a deep understanding of how these networks behave and why they perform so well remains elusive. In this unit, you will first learn about a model of rapid object recognition in visual cortex whose structure resembles that of deep networks. You will then learn some of the theory behind how the structural connectivity, complexity, and dynamics of deep networks govern their learning behavior.

Tomaso Poggio describes a theory of processing in the ventral pathway of the brain that solves the challenging problem of recognizing objects despite variations in their visual appearance due to geometric transformations such as translation and rotation.

The guest lecture by Surya Ganguli shows how insights from statistical mechanics applied to the analysis of high-dimensional data can contribute to our understanding of how functions such as object categorization emerge in multi-layer neural networks.

Haim Sompolinsky explores the theoretical role that common properties of biological neural architectures play in learning tasks such as classification. These properties include the number of processing stages in the network, compression or expansion of the dimensionality of the represented information, the role of noise, and the presence of recurrent or feedback connections.

Unit Activities

Useful Background

- Introductions to statistics and machine learning, including deep learning networks

Further Study

Additional information about the speakers’ research and publications can be found at their websites:

- Surya Ganguli, Neural Dynamics and Computation Lab, Stanford

- Tomaso Poggio, MIT

- Haim Sompolinsky, Harvard and Neurophysics Lab, Hebrew University of Jerusalem

Advani, M., and S. Ganguli. “Statistical Mechanics of High-Dimensional Inference.” (2016).

Anselmi, F., J. Z. Leibo, et al. “Unsupervised Learning of Invariant Representations.” Theoretical Computer Science 633 (2016): 112–21.

Babadi, B., and H. Sompolinsky. “Sparseness and Expansion in Sensory Representations.” Neuron 83 (2014): 1213–26.

Gao, P., and S. Ganguli. “On Simplicity and Complexity in the Brave New World of Large-Scale Neuroscience.” Current Opinion in Neurobiology 32 (2015): 148–55.

Poggio, T. “Deep Learning: Mathematics and Neuroscience.” Center for Brains, Minds and Machines Views & Reviews (2016).

Saxe, A., J. McClelland, et al. “Learning Hierarchical Category Structure in Deep Neural Networks.” Proceedings of the 35th Annual Meeting of the Cognitive Science Society (2013): 1271–76.

Serre, T., G. Kreiman, et al. “A Quantitative Theory of Immediate Visual Recognition.” Progress in Brain Research 165 (2007): 33–56.

Sompolinsky, H. “Computational Neuroscience: Beyond the Local Circuit.” Current Opinion in Neurobiology 25 (2014): 1–6.