Unit Overview


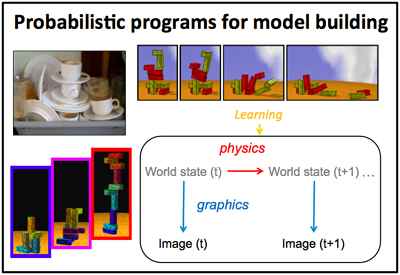

Josh Tenenbaum and colleagues propose that our intuitions about properties such as the stability of a stack of objects may derive from “probabilistic programs” in our heads that can simulate, with some uncertainty, the physics that governs how objects behave in space and time. Aspects of these programs are learned from infancy, as a child interacts with the world. (Image courtesy of Josh Tenenbaum, used with permission.)

How do we make intelligent inferences about objects, events, and relations in the world, from a quick glance? From infancy to adulthood, how do we learn new concepts and reason about novel situations, from little experience? In this unit, Josh Tenenbaum introduces a framework for addressing these questions based on the creation of generative models of the physical and social worlds that enable probabilistic inference about objects and events.

Part 1 introduces the concept of a generative model: how such a model can provide causal explanations of the world, how uncertainty can be represented by probabilities built into the model, and how the model can guide the inferences used to plan and to solve problems.
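To make the idea concrete, here is a minimal sketch of a generative model and its inversion, assuming a toy world that is not taken from the lecture: a hidden cause (whether a coin is fair or a trick coin) generates observed flips. The names `PRIOR`, `P_HEADS`, `sample`, `likelihood`, and `posterior`, and all of the probabilities, are hypothetical. Running the model forward produces data; running it backward with Bayes' rule turns observations into graded beliefs about their cause.

```python
import random
from math import prod

# Hypothetical generative model (illustrative numbers, not from the lecture):
# a hidden cause, which coin is being flipped, generates the observed flips.
PRIOR = {"fair": 0.9, "trick": 0.1}    # assumed prior probability of each cause
P_HEADS = {"fair": 0.5, "trick": 0.9}  # assumed P(heads | coin)


def sample(n, rng=random):
    """Run the model forward: pick a cause, then generate n flips from it."""
    coin = rng.choices(list(PRIOR), weights=list(PRIOR.values()))[0]
    flips = "".join("H" if rng.random() < P_HEADS[coin] else "T" for _ in range(n))
    return coin, flips


def likelihood(coin, flips):
    """P(flips | coin), treating flips as independent; flips is a string of 'H'/'T'."""
    p = P_HEADS[coin]
    return prod(p if f == "H" else 1 - p for f in flips)


def posterior(flips):
    """Run the model backward with Bayes' rule, enumerating every possible cause."""
    joint = {c: PRIOR[c] * likelihood(c, flips) for c in PRIOR}
    total = sum(joint.values())
    return {c: v / total for c, v in joint.items()}


print(posterior("HHHHH"))
```

Under these assumed numbers, five heads in a row move belief in the trick coin from the prior 0.1 to about 0.68. Exhaustive enumeration only works because there are two causes; richer models need approximate inference such as sampling.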

Part 2 illustrates the framework through problem domains in which learning and inference are captured by probabilistic generative models whose knowledge is organized into graphical structures learned from experience. You will see how the framework can model how humans learn new word concepts, infer the likely causes of a disease, and infer the properties of objects.
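The word-learning case can be illustrated with a simplified sketch in the spirit of Bayesian word-learning models; it is not the exact model from the lecture. The candidate meanings, their extensions (the sets of objects each meaning would label), and the uniform prior are all hypothetical, and the hypotheses are a flat list rather than a learned tree-structured space. The key assumption is the "size principle": if examples are drawn at random from a word's true extension, a narrow meaning that fits the examples explains them better than a broad one.

```python
# Hypothetical, nested candidate meanings for a new word, each given by its
# extension (the set of objects it would label). Sizes are illustrative assumptions.
HYPOTHESES = {
    "dalmatians": {"dal1", "dal2", "dal3"},
    "dogs": {"dal1", "dal2", "dal3", "terrier", "poodle", "beagle"},
    "animals": {"dal1", "dal2", "dal3", "terrier", "poodle", "beagle",
                "cat", "cow", "pig", "horse", "toucan", "frog"},
}
PRIOR = {h: 1 / len(HYPOTHESES) for h in HYPOTHESES}  # assumed uniform prior


def likelihood(examples, extension):
    """Size principle: each example is assumed drawn uniformly from the extension."""
    if not all(x in extension for x in examples):
        return 0.0  # a meaning that excludes an example cannot have produced it
    return (1 / len(extension)) ** len(examples)


def posterior(examples):
    """P(meaning | examples) via Bayes' rule over the hypothesis list."""
    scores = {h: PRIOR[h] * likelihood(examples, ext) for h, ext in HYPOTHESES.items()}
    total = sum(scores.values())
    return {h: s / total for h, s in scores.items()}


for examples in (["dal1"], ["dal1", "dal2", "dal3"], ["dal1", "poodle"]):
    print(examples, {h: round(p, 3) for h, p in posterior(examples).items()})
```

With one dalmatian the broader meanings stay plausible (4/7 for "dalmatians"); three dalmatians raise it to 64/73, because a broad concept would rarely yield three such similar examples by chance. A dalmatian plus a poodle rules out "dalmatians" entirely.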

The framework described in Parts 1 and 2 has limitations when it comes to common-sense reasoning about physical and social behavior. Greater reasoning power and flexibility can be achieved by building knowledge into a probabilistic program: much like an ordinary computer program, it can simulate behavior, but in a way that incorporates uncertainty about the world.
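As a rough illustration of a probabilistic program for the block-tower example in the image above, the sketch below combines a deterministic "physics" routine with noisy perception and estimates the probability of a fall by running the program many times. This is an assumption-laden toy: an intuitive-physics model of the kind described by Battaglia et al. (listed under Further Study) runs a physics engine forward in time, whereas here a static center-of-mass test for a single column of equal blocks stands in for that engine. The function names, the noise level, and the sample count are hypothetical.

```python
import random


def is_stable(xs, width=1.0):
    """Static stability check for one column of equal-mass, equal-width blocks.

    xs holds the horizontal centers of the blocks, bottom first. The table is
    assumed wide enough to support the bottom block. For every block, the combined
    center of mass of all blocks resting above it must lie over its top face.
    """
    for i in range(len(xs) - 1):
        above = xs[i + 1:]
        center_of_mass = sum(above) / len(above)
        if abs(center_of_mass - xs[i]) > width / 2:
            return False
    return True


def prob_falls(xs, noise=0.2, n_samples=2000, width=1.0, seed=0):
    """Monte Carlo estimate of P(tower falls) given uncertain perception.

    Each sample perturbs the perceived block positions with Gaussian noise
    (standard deviation `noise`), then runs the deterministic check above.
    """
    rng = random.Random(seed)
    falls = 0
    for _ in range(n_samples):
        perceived = [x + rng.gauss(0.0, noise) for x in xs]
        if not is_stable(perceived, width):
            falls += 1
    return falls / n_samples


print(is_stable([0.0, 0.4, 0.8]))  # False: each adjacent pair overlaps, but the top two overhang the base
print(prob_falls([0.0, 0.0, 0.0]), prob_falls([0.0, 0.3, 0.6]))
```

The design choice worth noting is that uncertainty lives in the inputs (where the blocks really are), not in the physics: a neatly aligned tower almost never falls under small perceptual noise, while a staggered tower that is nominally stable but near its tipping point falls in a large fraction of simulated runs.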

Part 3 illustrates model building with probabilistic programs through examples from intuitive physics, inferring the properties of a human face or body from a single visual image, and making inferences about planning, beliefs, and desires.
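Inferences about planning and desires can be sketched in the spirit of the inverse-planning approach of Baker, Saxe, et al. (listed under Further Study): assume an observed agent acts approximately rationally toward some goal, then invert that assumption to infer which goal best explains its actions. The one-dimensional world, the goal positions, the softmax ("Boltzmann-rational") action model, the rationality parameter `beta`, and the uniform prior are all illustrative assumptions, far simpler than the environments in that paper.

```python
import math

GOALS = {"A": -4, "B": 4, "C": 8}  # hypothetical goal positions on a line
ACTIONS = (-1, 0, 1)               # step left, stay, step right


def action_prob(state, action, goal_pos, beta=2.0):
    """Softmax policy: actions that bring the agent closer to its goal are
    exponentially more likely; beta controls how rational the agent is."""
    def utility(a):
        return abs(state - goal_pos) - abs(state + a - goal_pos)
    weights = {a: math.exp(beta * utility(a)) for a in ACTIONS}
    return weights[action] / sum(weights.values())


def goal_posterior(trajectory, beta=2.0):
    """Invert the planning model:
    P(goal | path) is proportional to P(goal) * product of P(action_t | state_t, goal)."""
    scores = {}
    for g, pos in GOALS.items():
        p = 1.0 / len(GOALS)  # uniform prior over goals (assumption)
        for s, s_next in zip(trajectory, trajectory[1:]):
            p *= action_prob(s, s_next - s, pos, beta)
        scores[g] = p
    total = sum(scores.values())
    return {g: v / total for g, v in scores.items()}


print(goal_posterior([0, 1, 2, 3]))
print(goal_posterior([0, 1, 2, 3, 4, 5, 6]))
```

Walking right from 0 to 3 rules out goal A but leaves B and C tied, since both explain the path equally well; once the agent walks past position 4 instead of stopping, goal C becomes by far the best explanation.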

Unit Activities

Useful Background

- Introduction to cognitive science

- Probability and statistics

- Introduction to machine learning, including probabilistic inference methods


Further Study

Additional information about the speaker’s research and publications can be found on his website.

See the tutorial by Tomer Ullman on implementing probabilistic models in the Church programming language.

Baker, C. L., R. Saxe, et al. “Action Understanding as Inverse Planning.” Cognition 113 (2009): 329–49.

Battaglia, P. W., J. B. Hamrick, et al. “Simulation as an Engine of Physical Scene Understanding.” Proceedings of the National Academy of Sciences 110, no. 45 (2013): 18327–32.

Goodman, N. D., and J. B. Tenenbaum. Probabilistic Models of Cognition (e-book).

Kulkarni, T., P. Kohli, et al. “Picture: An Imperative Probabilistic Programming Language for Scene Perception.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2015): 4390–99.

Perfors, A., J. B. Tenenbaum, et al. “A Tutorial Introduction to Bayesian Models of Cognitive Development.” Cognition 120 (2011): 302–21.

Tenenbaum, J. B., C. Kemp, et al. “How to Grow a Mind: Statistics, Structure, and Abstraction.” Science 331 (2011): 1279–85.